The Criminal Justice Administrative Records System: A next-generation research data platform


Administrative data systems are not necessarily built for research or statistical purposes. During operational use, administrative records are updated, overwritten, or deleted. These operational changes may not be fully documented, so research or statistical users may have little guidance on how to process the data. Because data collection methods vary and the CJARS team uses a diverse set of solutions to harmonize criminal justice records, there is a fundamental need to benchmark the data infrastructure against other available data series, both to validate the strengths of CJARS and to highlight its potential weaknesses to interested researchers.

The degree of data integration available through the CJARS microdata is unprecedented, creating challenges for ideal technical validation testing. Our validation efforts focus mainly on reproducing available aggregate statistical series published by the Bureau of Justice Statistics (BJS), with the implicit assumption that success in matching aggregate information bolsters the validity of the underlying microdata contained in CJARS as well.

We evaluate CJARS against federal statistical series, such as the FBI’s Uniform Crime Reporting (UCR) program [3] and several BJS programs: State Court Processing Statistics Series (SCPS) [4], National Prisoners Statistics Program (NPS) [5], National Corrections Reporting Program (NCRP) [6], Annual Probation Survey [7], and Annual Parole Survey [8]. These programs share with CJARS a common set of demographic and criminal justice measures, cover a common set of state and local jurisdictions, and offer considerable historical data for assessing data quality over time. Whenever possible, we benchmark CJARS data at the state level rather than aggregating across all CJARS states. This reflects the decentralization of the U.S. criminal justice system and allows us to better assess our data collection and harmonization practices. Our evaluation of arrest data against the UCR can only be made at local and county levels, so we omit it here. Please see the CJARS benchmarking report (https://cjars.isr.umich.edu/benchmarking-report-download/) for these findings.

Our analyses focus on reproducing caseload count and flow estimates (e.g., yearly entries into prison as measured in the NPS), as well as caseload characteristics and outcomes (e.g., demographic characteristics of defendants in SCPS data) in CJARS-covered jurisdictions. CJARS-based estimates that closely corroborate existing federal estimates provide important evidence on the quality and accuracy of our nascent data infrastructure endeavor and the population-level, linkable microdata from which the CJARS-based estimates are constructed.

Comparing CJARS adjudication to SCPS

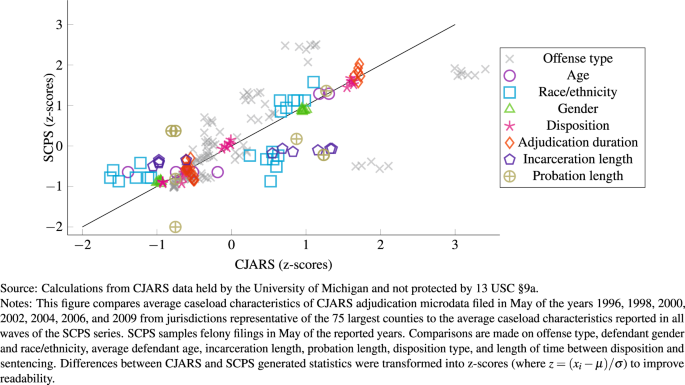

The U.S. lacks a comprehensive statistical reporting program on the criminal court system. The closest option we have for validating CJARS adjudication records is the SCPS program, an occasionally produced statistical series that documents characteristics of felony defendants from large urban counties. A number of common caseload composition and case processing metrics can be calculated using both CJARS and SCPS. Examples include average age of defendants, defendant gender and race/ethnicity, disposition type, time between disposition and sentencing, probation and incarceration sentence length, and offense type. This creates the opportunity to benchmark the CJARS adjudication data for the subset of records that falls within the scope of SCPS. Although the comparison covers only that subset, it still provides useful information for gauging the quality of the algorithms applied to all of our data.

Figure 2 provides a scatter plot in which comparable SCPS and CJARS statistics (e.g., average age of felony defendants) are plotted on the y- and x-axes, respectively. Individual statistics are plotted for each of the 1996, 1998, 2000, 2002, 2004, 2006, and 2009 waves of SCPS, with the corresponding CJARS statistics built from the same time frames. The color and shape of a marker in the scatter plot represent a specific outcome and are repeated across the survey waves. The expectation is that the plotted points will cluster around the reference line, which has a slope equal to one. Clustering around the line indicates that the statistics generated using CJARS and SCPS are comparable.

Table 1 shows formal tests of the difference between means calculated using SCPS microdata and means calculated using CJARS case data. The means have been calculated over jurisdiction-year cells, which represent a total of 609 jurisdiction-year combinations covered by each wave of the SCPS data. To account for differential temporal and jurisdictional coverage, the tests first residualize for event-year by jurisdiction fixed effects, meaning the tests evaluate the statistical similarity of caseload means between SCPS and CJARS within overlapping jurisdiction-year cells. Since jurisdictions are not resampled within CJARS, we cluster our standard errors at the jurisdiction level to account for their repeated observation over time. When we evaluate the null hypothesis that SCPS and CJARS-based measures are equal in the weighted regressions, none of the empirical tests is statistically significant at the level of p < 0.05. For one metric (violent offenses), we do reject the null hypothesis at the level of p < 0.1 (p-value = 0.059).
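One way to write down a test of this kind is sketched here as an illustrative specification, not necessarily the exact model behind Table 1. All symbols are assumptions introduced for this sketch: $y_{jts}$ is a caseload mean for jurisdiction $j$, event year $t$, and data source $s$; $\alpha_{jt}$ is a jurisdiction-by-year fixed effect; and $\mathrm{CJARS}_{s}$ is an indicator equal to one when the mean comes from CJARS rather than SCPS.

```latex
% Illustrative specification (assumed notation, not taken from the source)
y_{jts} = \alpha_{jt} + \beta \,\mathrm{CJARS}_{s} + \varepsilon_{jts},
\qquad H_{0}\colon \beta = 0
```

Under this reading, $\beta$ captures the average within-cell gap between the CJARS and SCPS means, the fixed effects $\alpha_{jt}$ absorb differences in temporal and jurisdictional coverage, and the standard error of $\hat{\beta}$ would be clustered by jurisdiction $j$. The weights used in the weighted regressions are not specified in the text and are left out of the sketch.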

Comparing CJARS incarceration to the NPS and NCRP

CJARS incarceration records can be compared to similar data from the NPS and the NCRP. All three data sources contain information that can be used to estimate annual prison entry counts, exit counts, and populations as well as incarceration rates. For succinctness, we present comparisons here only for annual entry counts.
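As a rough illustration of how such counts can be derived from spell-level microdata, the following minimal sketch tallies annual entries, exits, and year-end populations. The record layout (a list of entry and exit dates), the function name `annual_prison_flows`, and the sample spells are all hypothetical and are not the CJARS schema or data; a release dated December 31 is assumed not to count toward that year's year-end population.

```python
from collections import Counter
from datetime import date


def annual_prison_flows(spells, years):
    """Tally entries, exits, and year-end population for each year.

    spells: list of (entry_date, exit_date) tuples; exit_date is None
            when the person is still incarcerated (hypothetical layout).
    years:  iterable of calendar years to report.
    """
    entries = Counter(entry.year for entry, _ in spells)
    exits = Counter(exit_.year for _, exit_ in spells if exit_ is not None)

    flows = {}
    for year in years:
        year_end = date(year, 12, 31)
        # In custody at year end: entered on or before Dec 31 and
        # not yet released by that date (assumed convention).
        population = sum(
            1
            for entry, exit_ in spells
            if entry <= year_end and (exit_ is None or exit_ > year_end)
        )
        flows[year] = {
            "entries": entries[year],
            "exits": exits[year],
            "year_end_population": population,
        }
    return flows


# Made-up spells, for illustration only.
spells = [
    (date(2000, 3, 1), date(2001, 6, 30)),
    (date(2000, 11, 15), None),
    (date(2001, 2, 10), date(2001, 12, 31)),
]
flows = annual_prison_flows(spells, [2000, 2001])
print(flows)
```

Incarceration rates would additionally require resident population denominators for each state and year, which this sketch does not include.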

Figure 3 provides a comparison of annual entry counts as reported in the NPS and the NCRP, and from calculations using CJARS. A separate graph is given for each state for which CJARS has historical data holdings. In each graph, the purple line represents CJARS, blue the NPS, and green the NCRP. CJARS closely aligns with the NPS, the NCRP, or both in nearly every graph. For example, annual entry counts align well across all three data sources in Pennsylvania. Conversely, in Washington and North Carolina, for example, CJARS aligns better with one of the NPS or NCRP than with the other. A similar set of exercises comparing annual exit counts, year-end populations, and incarceration rates, which show substantively similar findings, can be found in the CJARS benchmarking report.

Additionally, we calculated the average absolute annual percent difference in the CJARS, NPS, and NCRP prison entry counts on a state-by-state basis. Across all years and states, CJARS entry counts differ from NPS and NCRP entry counts by an average absolute difference of 15.8% and 11.9%, respectively. In comparison, NPS and NCRP entry counts differ from each other by an average absolute difference of 16.1%, a larger discrepancy than CJARS has with either series.
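The average absolute percent difference described above can be sketched as follows. This is a minimal illustration under stated assumptions: the text does not say which series serves as the base of the percentage, so the benchmark count is assumed to be the denominator; the function names and the input layout (dictionaries keyed by state and year) are hypothetical; and the counts are made up, not CJARS, NPS, or NCRP figures.

```python
from collections import defaultdict
from statistics import mean


def abs_pct_diff(cjars_count, benchmark_count):
    """Absolute percent difference, with the benchmark as the base (assumed)."""
    return 100.0 * abs(cjars_count - benchmark_count) / benchmark_count


def average_abs_pct_diff(cjars, benchmark):
    """Average absolute percent difference over overlapping state-year cells.

    cjars, benchmark: dicts mapping (state, year) -> entry count.
    Returns (per_state, pooled): the mean difference within each state and
    the mean over all overlapping state-year cells.
    """
    by_state = defaultdict(list)
    # Only compare cells present in both series with a nonzero benchmark.
    for state, year in sorted(cjars.keys() & benchmark.keys()):
        if benchmark[(state, year)] > 0:
            by_state[state].append(
                abs_pct_diff(cjars[(state, year)], benchmark[(state, year)])
            )
    per_state = {state: mean(diffs) for state, diffs in by_state.items()}
    pooled = mean(d for diffs in by_state.values() for d in diffs)
    return per_state, pooled


# Made-up counts for two hypothetical states, for illustration only.
cjars = {("AA", 2001): 110, ("AA", 2002): 90, ("BB", 2001): 200}
benchmark = {("AA", 2001): 100, ("AA", 2002): 100,
             ("BB", 2001): 250, ("BB", 2002): 300}
per_state, pooled = average_abs_pct_diff(cjars, benchmark)
print(per_state, pooled)
```

Taking absolute values before averaging keeps over-counts and under-counts from cancelling out, so the statistic measures the typical size of the gap rather than its direction.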

Comparing CJARS probation and parole to the annual probation and parole surveys

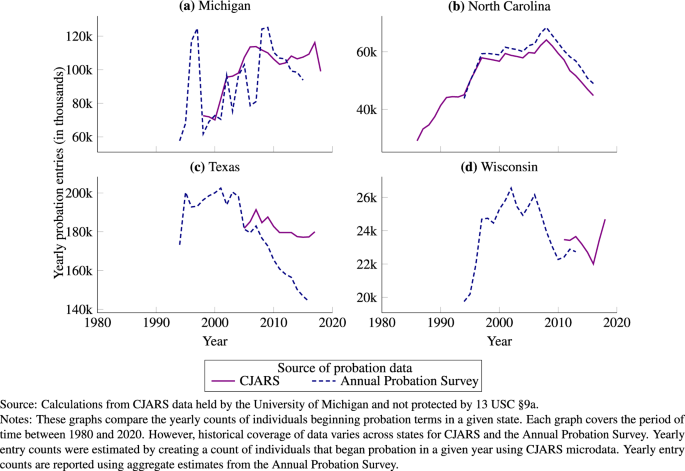

The probation records in CJARS can be compared to similar data from the Annual Probation Survey. Both CJARS and the Annual Probation Survey can be used to estimate yearly entry and exit counts, as well as yearly probationer populations and rates. Comparisons here focus on entry counts for succinctness.

Figure 4 compares probation entry counts observed in CJARS with those in the Annual Probation Survey for each state where CJARS has historical data holdings. The graphs show substantial alignment in North Carolina. There also appears to be good alignment in Michigan, but entry counts in the Annual Probation Survey are considerably unstable from year to year, with large increases and decreases. In comparison, the CJARS data from Michigan provide much more stable counts from year to year. The graph for Texas in Figure 4 shows similar counts when coverage in the CJARS data begins (in the early 2000s). However, a gap forms over time in which more entries are observed in the CJARS data. A similar set of exercises comparing annual exit counts, year-end populations, and probationer rates, which show substantively similar findings, can be found in the CJARS benchmarking report.

Additionally, we calculated the average absolute yearly percent difference in probation entry counts between CJARS and the Annual Probation Survey on a state-by-state basis. We then averaged the absolute yearly differences across all CJARS-covered states and years to quantify the overall difference in entry counts between the two sources. Comparing CJARS to the Annual Probation Survey shows an average absolute difference of 14.4%.

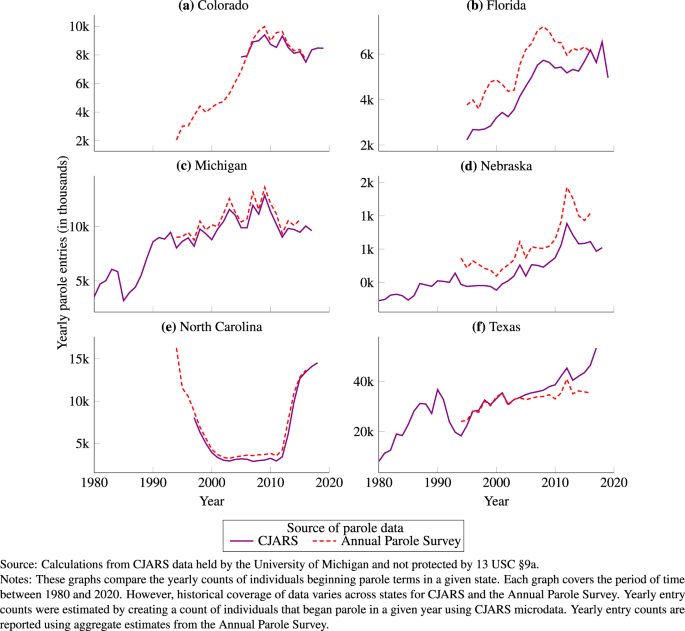

The parole records in CJARS can be compared against the same types of information gathered as part of the Annual Parole Survey. Both sources of parole data can be used to estimate yearly entry and exit counts, as well as yearly parolee populations and rates. Comparisons here focus on entry counts for succinctness.

Figure 5 compares parole entry counts observed in CJARS with those in the Annual Parole Survey for each state where CJARS has historical data holdings. Entry counts in CJARS and the Annual Parole Survey line up exceptionally well in almost all states. The one state with a slight difference is Nebraska, where the counts of events in CJARS are slightly lower than those reported in the Annual Parole Survey. However, the difference is consistent across years, so the trends in entry counts over time align between CJARS and the Annual Parole Survey. A similar set of exercises comparing annual exit counts, year-end populations, and parolee rates, which show substantively similar findings, can be found in the CJARS benchmarking report.

Finally, we calculated the average absolute yearly percent difference in parole entry counts between CJARS and the Annual Parole Survey on a state-by-state basis. We then averaged the absolute yearly differences across all CJARS-covered states and years to quantify the overall difference in entry counts between the two sources. Comparing CJARS to the Annual Parole Survey shows an average absolute difference of 15.7%.
